May 30, 2025

OffSec’s Take on the Global Generative AI Adoption Index

Discover OffSec’s take on the latest Global Generative AI Adoption Index report released by AWS.


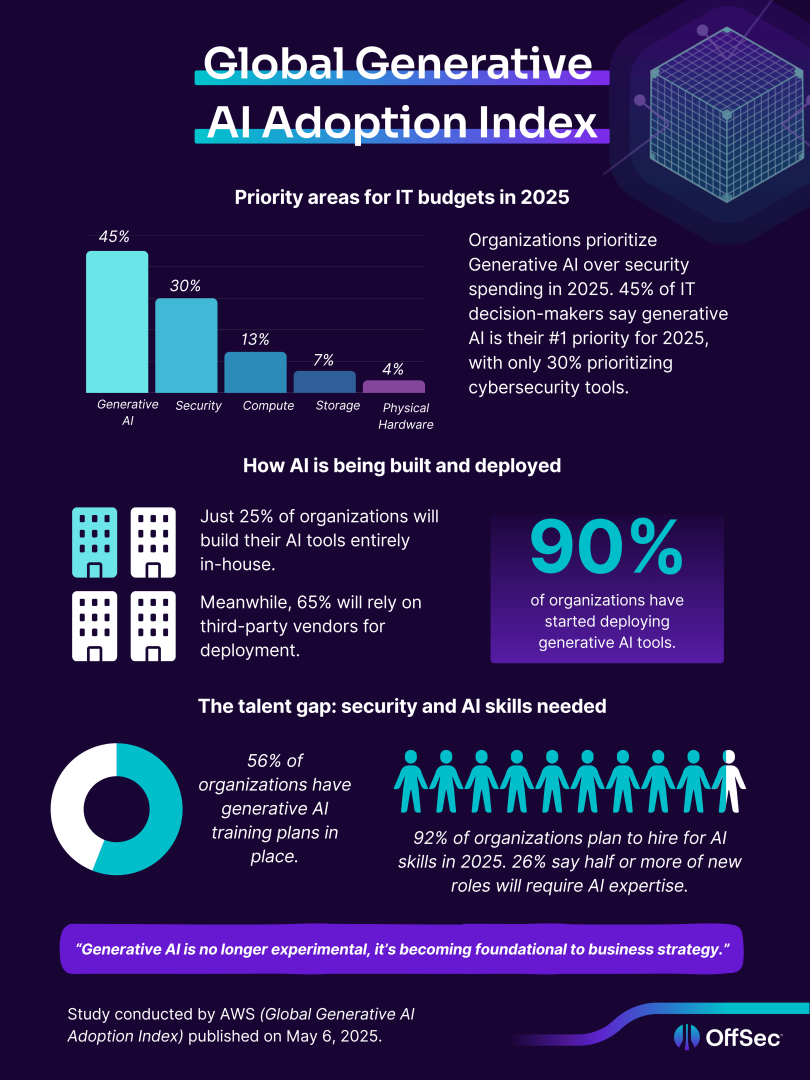

On May 6, 2025, AWS released the findings of its global Generative AI Adoption Index, revealing the scale and speed at which AI is reshaping enterprise technology. Based on responses from 3,739 senior IT decision-makers across nine countries, including the US, UK, India, and Japan, the study paints a clear picture: generative AI is no longer experimental; it’s becoming foundational to business strategy.

Respondents come from industries including financial services, ICT, manufacturing, and retail. Together they give a broad view of how different sectors, especially highly regulated ones, are approaching this shift.


Key observations:

- A shift in spending priorities: In 2025, 45% of IT decision-makers rank generative AI as the top priority in their tech budgets, making it the number one investment area. By contrast, 30% put cybersecurity tools at the top of their list.

- Widespread adoption: The Index reports significant uptake of generative AI tools, with organizations using them to improve productivity and drive innovation.

- Security implications: As generative AI becomes more prevalent, the attack surface expands. OffSec underscores the importance of integrating security into AI development and deployment processes.

The AWS Generative AI Adoption Index provides valuable insights into the rapid integration of generative AI technologies across industries. As a leader in cybersecurity training and workforce development, OffSec recognizes the transformative potential of generative AI while emphasizing the critical need for robust security measures.

In response to the growing adoption of generative AI, OffSec is enhancing its training to address the security challenges unique to these technologies. Our training provides hands-on experience in identifying and mitigating AI-related vulnerabilities, so that professionals are well prepared to secure AI-driven environments.

Security isn’t being left out; it’s being built in. In high-compliance industries such as financial services and education, organizations place greater emphasis on the security and privacy features of AI tools, with 48% citing security as a key requirement when selecting generative AI platforms.

AI and security are converging. The report makes it clear that AI tools aren’t replacing security; they’re reshaping it. Responsible deployment, regulatory compliance, and safe automation depend on strong security foundations.

This is a reminder that innovation without protection isn’t really progress.

As generative AI reshapes business operations, the need for security-aware professionals is more urgent than ever. Whether you’re deploying LLMs in production or building internal AI tools, it’s critical to understand how to identify, exploit, and mitigate vulnerabilities in these systems.

Start your journey with OffSec’s LLM Red Teaming Learning Path, a hands-on curriculum designed to equip cybersecurity professionals with the expertise to test and defend large language models (LLMs).

You’ll learn how to:

- Explore LLMs in depth, with a focus on their security implications

- Ethically engage with LLMs during security research

- Take a structured approach to understanding and attacking LLMs

- Enumerate and exploit vulnerabilities in and around LLMs

Get started with our LLM Red Teaming path!